How is Data Quality Engineering Changing in 2022?


The data analytics industry is booming, but with that growth comes a shift in roles. Traditionally, companies have mostly focused on collecting, cleaning and visualizing data.

A huge number and variety of tools on the market today allow data quality engineers to do this, though some do it better than others. However, the challenges and discussions are shifting further to the left, toward innovation in the way businesses transform and manage their data.

This next phase of the data analytics journey requires companies to redefine their goals and their organizations' needs.

Efficiency, flexibility, accessibility and accuracy will be fundamental in allowing organizations not just to keep data clean and secure, but to drive competitive advantage.

Data Quality Engineering: A Fast-Evolving Discipline

Data quality engineering is a fast-evolving discipline underpinning the digital products of many businesses. Data quality engineering leaders need to keep up with the pace of emerging technologies, while at the same time extracting value from existing solutions. This places significant demands on their teams.

Software engineers are in a unique position to help bring agility and innovation to the workforce. Areas of focus for data quality engineers in 2022 include:

- Talent

- Security

- New software solutions

- Low code options and improved UI

- Managing business stakeholders

- New application development

- Modernizing legacy solutions

Some of these areas complement each other, while others are in conflict. Data quality engineering leaders need to be armed with the right skills and knowledge to deliver customer value. They need to make sure that the teams and the applications they create become more resilient, adaptable and efficient.

Below are our top three predictions for changes in data quality engineering in 2022:

Prediction 1: Specialization Will Grow Within the Data Team

Typically, data analysts and engineers wear many different hats and handle a wide range of tasks, because investment in data teams has only recently increased.

As the value of data teams becomes more evident and more is invested in them, their members will develop specialized skills, each focused on a particular function.

This could mean having a data reliability engineer, a visualization lead, and separate backend and frontend data engineering teams. With a new generation of data quality tools at their disposal, the requirement for data quality engineers to be adept at manually processing data will decrease.

This will allow each of these specialists to use these more sophisticated tools to assure a much greater percentage of the data - up to 100% - freeing up their time to focus on value-added activities such as data synthesis, predictive analysis, modeling and augmented intelligence.

We believe these kinds of organizational changes will continue to develop over the next few years.

Prediction 2: Data Quality Engineering Will Focus on Data at the Source

In 2022, we believe that data quality engineers will focus on data quality at the source, because it is simply less expensive to do so and problems can be caught before they reach the master data.

Data quality engineering is primarily focused on data cleansing: identifying and correcting inconsistent and incorrect data points. Eliminating these errors at the source avoids the multiplication effect that arises when cleansing is left until the transformation stage, by which point a single bad record may already have been copied into many downstream records.

Recent data from Gartner supports this. More than 70% of CIOs involved in digital transformations say they wish data quality had been prioritized much earlier in the journey.
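
To make the idea of checking data at the source more concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Customer` record, the three validation rules, and the function names `validate_customer`, `split_at_source` and `fan_out` are hypothetical and are not taken from any IDS tool. It compares what happens when one bad customer record is joined to its orders unchecked with what happens when it is rejected before the join.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    # Hypothetical source record; the fields are illustrative only.
    customer_id: str
    email: str
    country: str


def validate_customer(record):
    """Return a list of rule violations; an empty list means the record is clean."""
    problems = []
    if not record.customer_id.strip():
        problems.append("missing customer_id")
    if "@" not in record.email:
        problems.append("email has no '@'")
    if len(record.country) != 2 or not record.country.isalpha():
        problems.append("country is not a two-letter code")
    return problems


def split_at_source(records):
    """Separate clean records from rejected ones before anything is loaded."""
    clean, rejected = [], []
    for record in records:
        problems = validate_customer(record)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected


def fan_out(customers, orders):
    """Toy transformation: join each order to its customer record."""
    by_id = {c.customer_id: c for c in customers}
    return [(order_id, by_id[cid]) for order_id, cid in orders if cid in by_id]


source = [
    Customer("C1", "ana@example.com", "GB"),
    Customer("C2", "bob-at-example.com", "US"),  # deliberately malformed email
]
orders = [("O1", "C1"), ("O2", "C2"), ("O3", "C2"), ("O4", "C2")]

clean, rejected = split_at_source(source)

# Without the source check, the single bad customer is copied into every
# joined row that references it.
unchecked = fan_out(source, orders)
bad_downstream = [row for row in unchecked if validate_customer(row[1])]

# With the source check, only clean customers reach the transformation.
checked = fan_out(clean, orders)

print(len(rejected), "record rejected at source")
print(len(bad_downstream), "bad rows downstream if the check is skipped")
```

In this toy data set, one rejected source record corresponds to three bad rows after the join, which is the multiplication effect in miniature. A real pipeline would also need a policy for the orders that reference a rejected customer, which this sketch simply drops.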

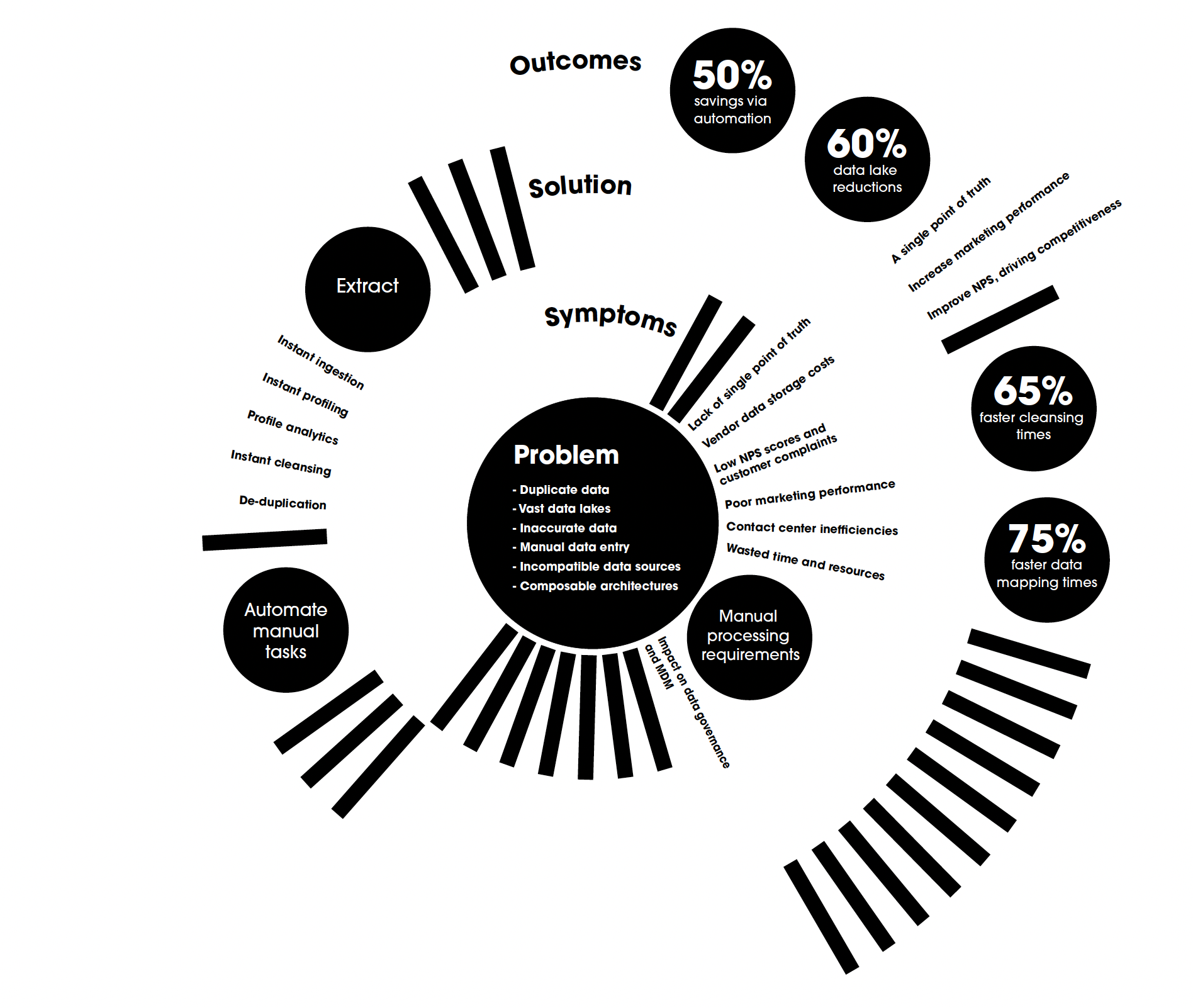

The evidence related to this approach is clear: the animation below shows just a few of the savings and quick wins that can be achieved by using a tool like IDS’s iData toolkit at the start of any transformation.

Prediction 3: The Shifting of Data Quality Engineering Functions in 2022

With the rise of AI-augmented tools and a new generation of data quality solutions that can process much larger volumes of data more accurately and in less time, the role of the data quality engineer is inevitably shifting to the left to take advantage of these advances.

In 2022, the data quality engineering team will refocus on understanding the data better, and on what the trends and opportunities they spot can add to the agility, security, and adaptability of the organization.

Software engineering leaders must use the right skills, tools, technologies, and practices to deliver great customer value. They should also ensure that their teams and the applications they deliver become more resilient, adaptable, and effective.

Conclusion

With the increasing sophistication of data quality tools, and the inclusion of AI and machine learning techniques, data quality engineers can turn their skills to value-added activities.

There can no longer be an excuse for poor quality data, or for assuring just a tiny percentage of the data.

Augmented data management not only helps to tame unorganized data and ensure that it is maintained in line with corporate policies and procedures over time; it also creates a trusted, on-demand source of business-critical information. This keeps businesses agile in a competitive environment, which is essential to support sustainable, profitable growth.

IDS’s Kovenant™ methodology is an end-to-end approach that allows data quality engineers to focus on what matters, rather than on the actual process of cleaning, transforming and migrating data. It even includes a full suite of test data management tools, freeing data quality engineers to assure 100% of the data, whether as part of a transformation project or a master data management strategy.

Learn how continuous testing can improve your business

IDS' Chief Technical Officer, James Briers, sheds light on the solutions to approaching complex data testing projects with mechanical efficiency.
